An independent study of YouTube's algorithms recently came out, claiming that they act in a ‘bigoted’ manner, targeting videos with the word ‘Gay’ or ‘Lesbian’ in the title and demonetizing them.

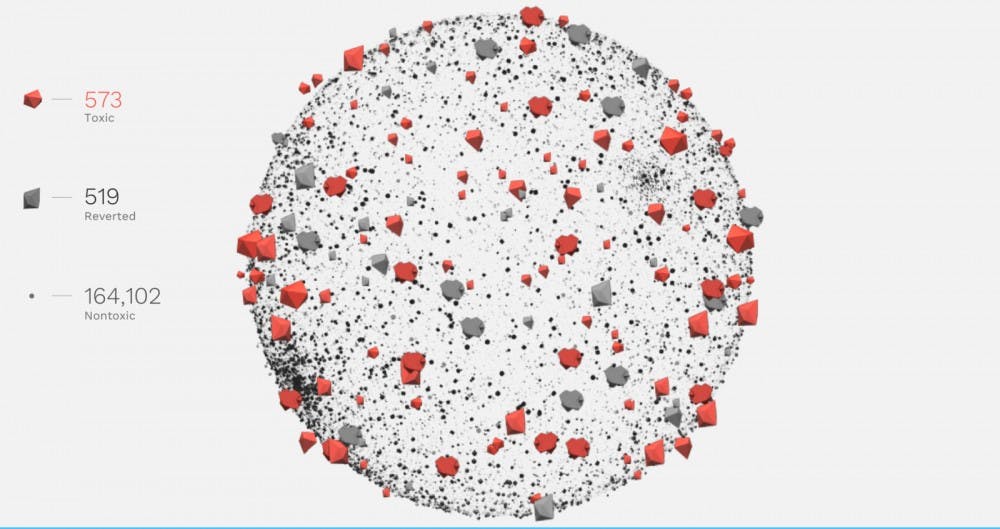

The study, led by three YouTube-focused researchers, came out on Monday, September 30. Sealow, the CEO of Ocelot AI; Andrew of the YouTube Analyzed channel; and Een of Nerd City teamed up to investigate reports that YouTube's algorithm automatically demonetized videos containing certain words, costing creators the advertising revenue from those videos. Each of them eventually published a video of their own on the topic, but all three relied on the same dataset.

As expressed by The Verge:

The trio of researchers eventually published the list of words in an Excel document that is available to the public for reading and reproduction.

Each test video was kept to a simple format, with minimal content and no images that might trigger the bots. The videos were designed specifically to isolate the effect of individual words on monetization.

As they dug into the particulars, they found that terms associated with pro-LGBT content, most notably gay, lesbian, progressive, and liberal, were quickly demonetized, as were keywords like You, that, abuse, needle, Shrek, Indonesia, and many others. Some demonetized words were clearly political in nature, such as Afghanistan or abortion, while others appeared to carry sexual meanings or double entendres, such as penis or nipple. However, terms like conservative or Republican did not trigger the software, suggesting either a bias in the bots along the political spectrum or, at the least, that they have learned patterns of content that tend to create controversy and tension for advertisers. The research also found that swapping a word like gay for happy immediately changed the monetization result.

When a video is demonetized, creators do have the option to resubmit it so that a human reviewer can examine the case and determine whether the bot's decision was fair.

YouTube has denied multiple times that their bot software targets these keywords. YouTube CEO Susan Wojcicki stated in an interview that “We work incredibly hard to make sure that when our machines learn something — because a lot of our decisions are made algorithmically — that our machines are fair… There shouldn’t be [any automatic demonetization].”

But despite the CEO’s words, the study suggests that such demonetization is still going on.

These developments came a month after a number of LGBT YouTubers filed a lawsuit against YouTube over alleged discrimination.

Sources: Nerd City, Sealow, Ocelot, YouTube Analyzed, Excel, The Verge, Verge

Image: Wikimedia